The digital era has evolved rapidly, bringing a steady stream of innovations and new technologies. A decade ago, generating an image to order in a few seconds was not possible. Today, with the help of various AI tools and the right prompt, we can create AI-generated images that reflect our own vision and creativity. This shift has made our lives easier, improved the quality of our work, and sped it up.

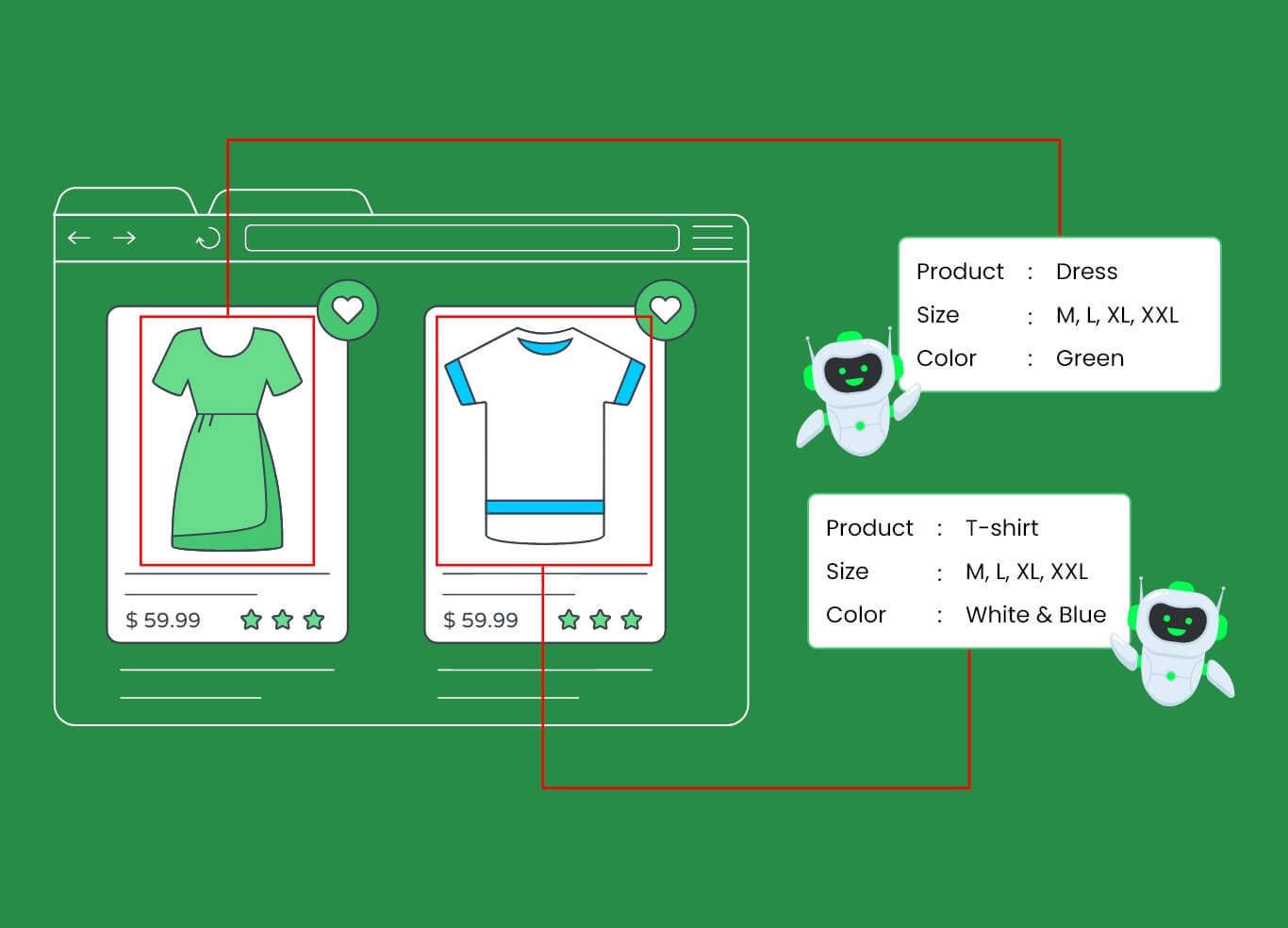

Beyond image creation, the use of artificial intelligence has spread like wildfire across industries: healthcare for quick disease detection, the automobile industry for self-driving cars, the ecommerce sector for product suggestions and an accurate look and feel of products, surveillance and security for object detection, and more.

AI now handles tasks ranging from small chores to large, complex processes, simplifying them with precision and efficiency. Today, if you ask ChatGPT or Google Gemini about a subject, a brief summary is available within seconds. Ever wondered how these applications quickly offer detailed and usually accurate insights in response to a prompt? The full answer is complex and involves several critical processes; however, it can be summed up in one word: “data”.

In today’s landscape, data plays a key role. For every business, regardless of its nature, size, or type, data provides deeper insights when it is put to good use. Businesses across industries leverage data to make informed decisions, enhance productivity, stay competitive, and streamline operations.

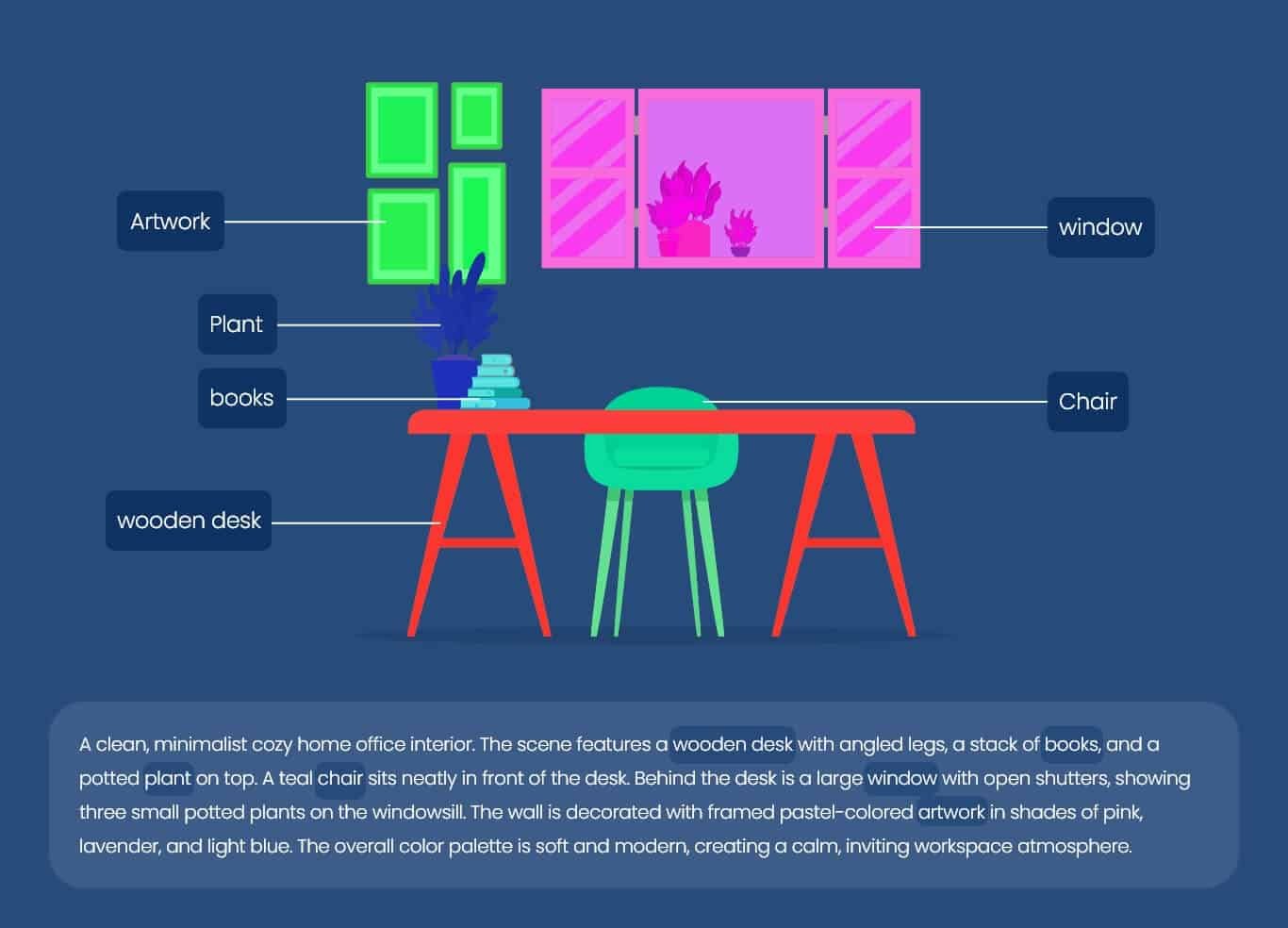

While data is key to success, gaining insights from it depends on using high-quality information. Raw data on its own rarely yields valuable insights and can lead to erroneous, insignificant, and inaccurate results. Therefore, maintaining high data quality is essential. The ability to access information in seconds or to turn an idea into an accurate visual depends on the right data annotation, especially for LLMs (large language models). Data annotation is the process of labeling data so that AI models can detect objects, information, and details, and it is a significant part of building any AI model.

Efficient LLM with Accurate Data Annotation

Understanding Data Annotation in Brief

The era we live in is digitized, quick, and efficient. The push toward digitalization has led to a variety of innovations, resulting in advanced AI systems and applications. Artificial intelligence has long been a significant part of this, quietly handling various tasks in the background. Today, the role and significance of AI have grown enormously.

The creation of unique images and the rapid retrieval of information with AI have not only advanced but also made lives easier. If we look around today, much of what we use has AI built in: Siri, earphones, ecommerce sites that recognize images, chatbots, televisions, smart glasses, VR applications, and more.

In all of these products and applications, AI enhances outcomes and provides quick responses. This improves customer experiences by enabling simple tasks without requiring technical knowledge. However, the question is how artificial intelligence produces accurate results so consistently. The answer is that AI is fed massive amounts of data, categorized into different sections. For example, under animals, cats, dogs, cows, horses, and elephants are tagged as four-legged animals. Vehicles such as cars, trucks, bikes, and trailers, on the other hand, are categorized separately.
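As a rough illustration of the category tagging described above, the sketch below pairs a few raw items with a category and a set of tags. The `AnnotatedExample` class, the `annotate` helper, and the sample labels are hypothetical names chosen for this example; they are not taken from any specific annotation tool.

```python
from dataclasses import dataclass, field


@dataclass
class AnnotatedExample:
    """One raw item paired with the labels an annotator attached to it."""
    raw_text: str
    label: str                                    # broad category, e.g. "animal"
    tags: list[str] = field(default_factory=list)  # finer-grained attributes


# Raw, unlabeled items (illustrative data only).
raw_items = ["a cat sleeping on a sofa", "a truck parked on the road"]

# Hypothetical labels that a human annotator might supply for each item.
annotations = {
    "a cat sleeping on a sofa": ("animal", ["cat", "four-legged"]),
    "a truck parked on the road": ("vehicle", ["truck"]),
}


def annotate(items, labels):
    """Turn raw items into structured, labeled examples."""
    return [AnnotatedExample(text, *labels[text]) for text in items]


dataset = annotate(raw_items, annotations)
for example in dataset:
    print(example.label, example.tags, "<-", example.raw_text)
```

Keeping the category and the finer tags in separate fields is one possible design; it lets the same item be filtered by broad class or by a specific attribute.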

Categorizing data in this way, through accurate and efficient AI data labeling, helps AI models understand and identify objects. ChatGPT, Gemini, and other AI applications are leading today’s landscape. By tapping into the potential of artificial intelligence, AI developers and researchers aim to enhance dynamic communication capabilities and move beyond the limitations of automated chatbots.

AI tools like ChatGPT and other well-known applications require high-quality training data. This is where data annotation services come in, as they categorize information into meaningful attributes. For large AI applications, data annotation is a crucial ingredient for successful and unbiased outcomes. In simple words, data annotation, often referred to as data labeling or tagging, is a significant process in developing AI models.

Data annotation for LLMs is the process of attaching meaningful labels, tags, or attributes to raw, unstructured data (like images, videos, text, or audio). This process transforms the raw data into structured, annotated data that machine learning (ML) models can understand and learn from. It is the foundation of supervised learning, as it provides the “ground truth” that the algorithm uses to identify patterns and eventually make accurate predictions on new, unlabeled data. Audio annotation and video annotation are segments of data annotation that help the model learn from those media and interact seamlessly with users. As data annotation is vast and covers a variety of aspects, here’s a breakdown of AI data labeling for better understanding.

Data Annotation Factors

- Enables Supervised Learning: ML models in supervised learning require examples of input data paired with the desired output. Annotation provides this pairing.

- Model Training: The annotated data serves as the training set, allowing the ML model to learn to associate specific features or patterns with the correct labels.

- Ensuring Accuracy: High-quality, consistent annotation directly leads to high-performing, accurate ML models.

- Creating Ground Truth: The labels created by human annotators are considered the “truth” against which the model’s predictions are measured and evaluated.
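To make the idea of ground truth concrete, here is a minimal sketch that compares a model's predictions with human-provided labels and reports the share that match. The `accuracy` function and the sample labels are illustrative assumptions, not a description of any particular evaluation pipeline.

```python
def accuracy(ground_truth, predictions):
    """Fraction of predictions that match the human-annotated labels."""
    if len(ground_truth) != len(predictions):
        raise ValueError("ground_truth and predictions must have the same length")
    if not ground_truth:
        raise ValueError("at least one labeled example is required")
    correct = sum(1 for truth, pred in zip(ground_truth, predictions) if truth == pred)
    return correct / len(ground_truth)


# Labels created by human annotators (the "ground truth"); illustrative data.
ground_truth = ["animal", "vehicle", "animal", "vehicle"]

# Hypothetical model outputs for the same four inputs.
predictions = ["animal", "vehicle", "vehicle", "vehicle"]

score = accuracy(ground_truth, predictions)
print(f"accuracy: {score:.2f}")  # 3 of the 4 predictions match the labels
```

If the annotated labels themselves are wrong, this score measures agreement with the mistakes, which is why annotation quality matters as much as model quality.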

Audio annotation, video annotation, and the other types of data labeling all involve the aspects mentioned above, resulting in an improved AI model. Nonetheless, developing an AI model is complex and demands expertise, knowledge, the right use of technology, and the ability to handle complex databases.

One solution is to outsource data annotation services, which supports the success of the model. Moreover, LLMs (large language models) are a groundbreaking technology that has significantly altered how users search. Let’s delve into LLMs and why data annotation is crucial for them.

Brief Insights for Large Language Models

As discussed, data annotation has become a significant aspect of advanced tech models. The use of artificial intelligence can be seen in a variety of industries, from ecommerce to education, and every sector leverages it for a competitive advantage. AI is a key player and is present almost everywhere. For tech giants like Microsoft, Meta, Google, and others, large language models are central to innovation, automation, and improving the user experience through simpler processes.

Take ChatGPT, for example: it is a proprietary tool built on OpenAI’s GPT (generative pre-trained transformer) models to understand and complete tasks within seconds. In simple terms, an LLM is an advanced AI model trained on massive text datasets for content generation, translation, analysis, summarization, and more. Moreover, as the technology advances, audio annotation services are likely to become a significant part of LLM development.

With the emergence of large language models, AI has gained popularity among the masses due to its capability to understand and generate human-like text. To leverage the power of LLMs, it helps to have data annotated by a team of experts. Data annotation outsourcing services can help deliver high-quality datasets and ensure precision.

Since large companies look for novel ways to annotate, tag, and label data, outsourcing to experts is one comprehensive solution. Outsourcing service providers have teams of experts who work to improve LLM accuracy, efficiency, and reliability. In addition, LLMs offer various benefits, including versatility across a wide range of tasks such as content creation and summarization. This helps to increase efficiency and productivity while eliminating repetitive language tasks. Adopting large language models brings a variety of advantages, such as:

- Versatility

- Increased efficiency

- Enhanced data analysis

- Improved customer experience

- Reduced need for task-specific training data

- Content generation

These are some of the benefits that showcase the importance of large language models. To reap these benefits, data annotation for large language models is significant: AI data labeling is a crucial part of preparing an AI model to complete its tasks. The next section explores in detail why data annotation for LLMs is crucial.

Data Annotation for Large Language Models

As briefly discussed, LLMs have become an essential part of the evolving landscape. The expanding scope of technology has led to the adoption of cutting-edge approaches that help users get more accurate outcomes. For achieving precise outcomes, data annotation is not only crucial but also a non-negotiable step in developing successful, result-oriented, and accurate LLMs. In other words, data annotation for LLMs includes labeling, tagging, and attributing large volumes of data to create high-quality, precise training datasets. This enables AI models to understand, identify, and generate accurate and less biased responses. AI data labeling for LLMs also needs to be efficient to achieve effective results.

Data annotation can be manual or automated; either way, it demands expertise, experience, and knowledge. Hence, data annotation outsourcing services are one approach to improving overall performance. Data labeling or tagging is significant for large language models for various reasons, as follows:

Major Reasons for LLM Annotation

- Accurate data: One of the primary reasons to adopt data annotation services is to ensure the dataset is properly aligned, organized, and structured, serving as a reference point from which the AI model can learn patterns, identify objects, and make predictions.

- Efficiency and accuracy: AI data labeling services enhance the model’s ability to perform according to the prompt and deliver accurate outcomes for users.

- Mitigates bias: ChatGPT, Gemini, and other large language models need to provide unbiased responses to ensure users receive the right answers. Consistent, clear, and precise data annotation helps LLMs avoid biased results.

- Enhance specific task: An LLM is trained for tasks such as text generation, information extraction, summarization, and more. To ensure these tasks are performed accurately, the annotation or tagging of the data must be precise and of high quality.
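The list above stresses consistent annotation. One common way to check consistency, shown here purely as an illustration and not as a method the article prescribes, is to have two annotators label the same items and measure how often they agree beyond chance using Cohen's kappa. The function name and the sample labels are assumptions made for this sketch.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both annotators must label the same items")
    n = len(labels_a)
    if n == 0:
        raise ValueError("at least one labeled item is required")

    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected agreement if each annotator labeled at random with their own label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = set(count_a) | set(count_b)
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same four texts.
annotator_1 = ["positive", "positive", "negative", "negative"]
annotator_2 = ["positive", "negative", "negative", "negative"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa: {kappa:.2f}")
```

A value near 1 suggests the labeling guidelines are being applied consistently, while a value near 0 suggests agreement is no better than chance and the guidelines may need to be clarified.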

These are the significant reasons why data annotation is crucial for LLMs and their success. Incorrect information or poor data quality can degrade the overall performance of a large language model. These models are also regularly updated with newer information so that their answers stay current. Data annotation for LLMs consists of various methods that yield accurate results and help models perform effectively. The following are a few methods out of many:

- Automated annotation

- Hybrid method (human annotator and automation)

- Active learning

- Manual annotation
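The hybrid and active learning methods in the list above can be sketched roughly as follows: a model pre-labels the data, confident predictions are accepted automatically, and uncertain ones go to a human annotator, with the least confident items reviewed first. The function names, the 0.8 threshold, and the confidence scores are hypothetical values chosen for illustration.

```python
def split_by_confidence(predictions, threshold=0.8):
    """Hybrid routing: keep confident machine labels, send the rest to humans.

    predictions is a list of (item, predicted_label, confidence) tuples.
    """
    auto_labeled, needs_review = [], []
    for item, label, confidence in predictions:
        if confidence >= threshold:
            auto_labeled.append((item, label))
        else:
            needs_review.append(item)
    return auto_labeled, needs_review


def pick_most_uncertain(predictions, k):
    """Active learning (least-confidence sampling): pick the k items
    the model is least sure about so a human labels them first."""
    ranked = sorted(predictions, key=lambda p: p[2])
    return [item for item, _, _ in ranked[:k]]


# Hypothetical model output: (item id, predicted label, confidence score).
model_output = [
    ("doc1", "animal", 0.95),
    ("doc2", "vehicle", 0.55),
    ("doc3", "animal", 0.81),
    ("doc4", "vehicle", 0.40),
]

auto_labeled, needs_review = split_by_confidence(model_output)
first_to_label = pick_most_uncertain(model_output, k=1)
print("accepted automatically:", auto_labeled)
print("sent to human annotators:", needs_review)
print("highest priority for review:", first_to_label)
```

The threshold is a trade-off: a higher value sends more items to human reviewers and costs more, while a lower value accepts more machine labels and risks more errors in the training data.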

The above methods are a few of the data annotation approaches that help get accurate outcomes from the model. However, successful data annotation requires expertise, knowledge, the right technology, an understanding of the process, the ability to handle complex databases, and more. Therefore, big tech firms keep looking for novel ways to get accurate results. Data annotation outsourcing services play a key role for firms and other LLM developers in obtaining high-quality information.

Moreover, outsourcing enhances quality, streamlines processes, allows businesses to focus on core activities, and saves costs and time, boosting productivity. Outsourcing to data annotation service providers allows businesses to obtain quality datasets for training LLMs and thereby improve LLM accuracy.

Uniquesdata is a leading provider of data management services, offering a wide range of data annotation services at cost-effective pricing plans. With many years of experience, a team of professionals, and cutting-edge technology, Uniquesdata aims to deliver precise and efficient results.

Final Thought

LLMs have rapidly become a significant part of the market, helping the businesses that adopt them to remain competitive. Data annotation outsourcing services enable big tech firms to obtain accurately labeled data for training their AI models.